20 Newsgroup data - Text Classification

Text classification for 20 newsgroup data

Text classification is a way to assign predefined labels to text. Text classifiers can be used to organize, structure, and categorize any kind of text, from documents and files to content from all over the web.

Data set:

The 20 Newsgroups data set is a collection of approximately 20,000 newsgroup documents, divided across 20 different newsgroups. The dataset contains 20 files, one for each group. The 20 Newsgroups collection has become a popular data set for experiments in text applications of machine learning techniques, such as text classification and text clustering.

The groups are:

• comp.graphics
• comp.os.ms-windows.misc
• comp.sys.ibm.pc.hardware
• comp.sys.mac.hardware
• comp.windows.x
• rec.autos
• rec.motorcycles
• rec.sport.baseball
• rec.sport.hockey
• sci.crypt
• sci.electronics
• sci.med
• sci.space
• misc.forsale
• talk.politics.misc
• talk.politics.guns
• talk.politics.mideast
• talk.religion.misc
• alt.atheism
• soc.religion.christian

Project code:

I imported the necessary libraries: numpy, pandas, seaborn, and matplotlib for data processing and visualization.

For the data set, I imported the data from sklearn.datasets, which provides some of the most commonly used datasets in machine learning, using its fetch function.

After loading the dataset, I divided it into train and test data.

With the train and test data loaded, I displayed all the newsgroups in the dataset as categories.

To get a first look at the data, I used the len function to see the size of the train and test data.
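The loading steps above could look roughly like the sketch below. It assumes that the fetch function mentioned is scikit-learn's `fetch_20newsgroups`, which downloads the data on first use. The helper names `load_split` and `summarize` are made up for this illustration and are not taken from the notebook.

```python
def load_split(subset):
    """Fetch one split ("train" or "test") of the 20 Newsgroups data.

    Assumption: the post's fetch function is scikit-learn's
    fetch_20newsgroups. The import is deferred so that defining this
    helper does not itself require scikit-learn.
    """
    from sklearn.datasets import fetch_20newsgroups
    return fetch_20newsgroups(subset=subset)


def summarize(name, texts, category_names):
    """Return a one-line summary of a split, using len as in the post."""
    return f"{name}: {len(texts)} documents in {len(category_names)} categories"


# Usage (needs scikit-learn and, on the first run, a network connection):
# train = load_split("train")
# test = load_split("test")
# print(train.target_names)  # the newsgroup names, i.e. the categories
# print(summarize("train", train.data, train.target_names))
# print(summarize("test", test.data, test.target_names))
```

The returned object exposes the raw texts as `data`, the integer labels as `target`, and the category names as `target_names`.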

After that, I calculated the frequency of words in both the train and test data and plotted the frequency against the corresponding newsgroup category for each data set.
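The post does not spell out how the frequency was computed. One plausible reading, shown here as an assumption, is the total number of words per category. The corpus below is a made-up stand-in for the real data.

```python
from collections import Counter


def words_per_category(texts, labels, category_names):
    """Total number of whitespace-separated words per category."""
    totals = Counter()
    for text, label in zip(texts, labels):
        totals[category_names[label]] += len(text.split())
    return dict(totals)


# Tiny stand-in corpus (made up); each label is an index into `names`.
names = ["rec.autos", "sci.space"]
texts = ["my car needs new tires", "the shuttle reached orbit", "engine oil"]
labels = [0, 1, 0]

counts = words_per_category(texts, labels, names)
print(counts)  # {'rec.autos': 7, 'sci.space': 4}
```

Counts like these could then be drawn as a bar chart, for example with seaborn's `barplot`, with the categories on one axis and the counts on the other.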

Graph of word frequency vs. categories (newsgroups) in the training data set:

Similarly for the test data:

Graph of word frequency vs. newsgroups in the test data set:

Data Pre-processing:

Pre-processing is one of the most important steps in data mining: it cleans up the dataset and makes it usable for modeling.

For pre-processing, I flattened both the train data and test data from a 2D array into a 1D array.
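The post does not show which structure was flattened or how. As a minimal sketch, assuming each row of the 2D structure holds a single document, flattening could look like this:

```python
from itertools import chain

# Made-up 2D structure: each row holds a single document.
nested = [["first post"], ["second post"], ["third post"]]

# Flatten one level of nesting into a plain 1D list.
flat = list(chain.from_iterable(nested))
print(flat)  # ['first post', 'second post', 'third post']
```

With NumPy arrays, `ndarray.ravel()` or `ndarray.flatten()` does the same job.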

Classification:

There are several machine learning algorithms for text classification. In this project I implemented four of them to see how each one works on our dataset.

Algorithms used:

1) Naïve Bayes: Naïve Bayes is one of the most widely used algorithms in text classification and text analysis. It is based on Bayes' theorem, which lets us compute the conditional probability of one event given another from the probabilities of the individual events. The "naïve" part is the assumption that the words of a text occur independently of each other given the category. So we calculate the probability of each tag for a given text and then output the tag with the highest probability.

The probability of A, if B is true, is equal to the probability of B, if A is true, times the probability of A being true, divided by the probability of B being true.
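Written as a formula, the statement above is Bayes' theorem. The second line applies it to classification by taking A as the tag and B as the text, which is the usual reading and is stated here as an assumption about what the post intends.

```latex
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}

P(\text{tag} \mid \text{text}) = \frac{P(\text{text} \mid \text{tag})\, P(\text{tag})}{P(\text{text})}
```

Since the denominator is the same for every tag, comparing tags only requires the numerator.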

This means that any vector that represents a text will have to contain information about the probabilities of the appearance of certain words within the texts of a given category, so that the algorithm can compute the likelihood of that text's belonging to the category.
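To make the idea concrete, here is a small from-scratch sketch of multinomial Naïve Bayes on a made-up four-document corpus. It is an illustration only: the notebook presumably used a library implementation, and the add-one (Laplace) smoothing and whitespace tokenization are assumptions chosen to keep the example short.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(texts, labels):
    """Collect the counts needed for multinomial Naive Bayes."""
    class_docs = Counter(labels)          # number of documents per category
    word_counts = defaultdict(Counter)    # word counts per category
    vocab = set()
    for text, label in zip(texts, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    return class_docs, word_counts, vocab


def predict_naive_bayes(model, text):
    """Return the category with the highest log posterior for `text`."""
    class_docs, word_counts, vocab = model
    total_docs = sum(class_docs.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_docs.items():
        score = math.log(n_docs / total_docs)            # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue                                 # skip unseen words
            # Add-one smoothing keeps every probability above zero.
            p = (word_counts[label][word] + 1) / (total_words + len(vocab))
            score += math.log(p)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


texts = [
    "the engine and the brakes need service",
    "new tires for the car",
    "the rocket reached orbit",
    "astronauts aboard the space station",
]
labels = ["rec.autos", "rec.autos", "sci.space", "sci.space"]
model = train_naive_bayes(texts, labels)

print(predict_naive_bayes(model, "car engine service"))           # rec.autos
print(predict_naive_bayes(model, "rocket to the space station"))  # sci.space
```

Working with log probabilities instead of multiplying raw probabilities avoids numerical underflow on long texts.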

Classification report for Naïve Bayes:

Actual target values and predicted values:

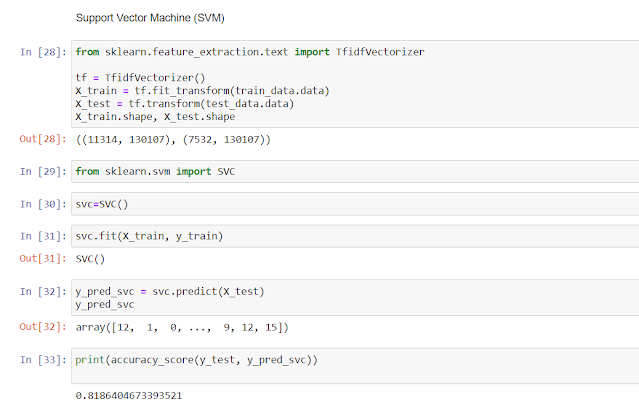

2) Support Vector Machines (SVC): Support Vector Machines (SVM) is another powerful machine learning algorithm for text classification because, like Naïve Bayes, SVM doesn't need much training data to start providing accurate results.

SVM draws a line or “hyperplane” that divides a space into two subspaces. One subspace contains vectors (tags) that belong to a group, and another subspace contains vectors that do not belong to that group. Those vectors are representations of your training texts, and a group is a tag you have tagged your texts with.
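The separating hyperplane can be illustrated with a tiny linear SVM trained by sub-gradient descent on the hinge loss. This is a sketch under stated assumptions, not the notebook's code: the 2D points stand in for text vectors, the labels are +1 and -1 for "belongs to the group" and "does not", and the learning rate and regularization strength are arbitrary choices.

```python
def train_linear_svm(points, labels, epochs=200, lr=0.1, lam=0.01):
    """Fit w and b by sub-gradient descent on the regularized hinge loss.

    Labels must be +1 or -1.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin or misclassified: pull the
                # hyperplane toward classifying this point correctly.
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Correct with room to spare: only apply regularization.
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def predict(w, b, x):
    """Report which side of the hyperplane w.x + b = 0 the point x is on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)

print(predict(w, b, (3, 2)), predict(w, b, (-3, -2)))  # 1 -1
```

A single hyperplane only separates two groups, so with 20 newsgroups several such classifiers have to be combined, for example one per group.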

3) K-Nearest Neighbors (KNN): For the unknown data point we want to classify, the KNN algorithm considers its K nearest neighbors and assigns it the group that appears most often among those K neighbors. This is the main idea of this simple supervised classification algorithm.

The major problem in classifying texts is that they are a mixture of characters and words. We need a numerical representation of those words to feed them into our KNN algorithm so that it can compute distances and make predictions. One such numerical representation is a bag of words with tf-idf (term frequency - inverse document frequency). The feature vector of an unlabeled text is compared against the feature vector of every text in our data set by computing the distance to each. The K nearest vectors are then selected, and the class that occurs most often among them is assigned to the unlabeled text.

For the K-nearest-neighbor classification, I set k to 3.
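The steps above can be sketched from scratch as follows. The corpus is made up, Euclidean distance is an assumption (the post does not name the distance measure), and the tf-idf weighting shown is one common variant rather than necessarily the one used in the notebook. Only k = 3 comes from the post.

```python
import math
from collections import Counter


def fit_tfidf(docs):
    """Learn vocabulary and idf weights; return a function text -> vector."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({word for words in tokenized for word in words})
    # Document frequency: in how many documents does each word appear?
    df = Counter(word for words in tokenized for word in set(words))
    idf = {word: math.log(len(docs) / df[word]) + 1.0 for word in vocab}

    def transform(text):
        tf = Counter(text.lower().split())
        # Words outside the learned vocabulary are ignored.
        return [tf[word] * idf[word] for word in vocab]

    return transform


def knn_predict(train_vectors, train_labels, query, k=3):
    """Majority vote among the k training vectors closest to `query`."""
    by_distance = sorted(
        zip(train_vectors, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


train_texts = [
    "car engine oil", "car tires brakes", "engine brakes repair",
    "rocket orbit launch", "space rocket station", "orbit space telescope",
]
train_labels = ["rec.autos"] * 3 + ["sci.space"] * 3

transform = fit_tfidf(train_texts)
train_vectors = [transform(text) for text in train_texts]

print(knn_predict(train_vectors, train_labels, transform("car engine brakes")))
print(knn_predict(train_vectors, train_labels, transform("rocket space orbit")))
```

An odd k such as 3 reduces the chance of tied votes when there are two candidate classes, although ties remain possible with more classes.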

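4) Logistic Regression: Logistic regression is used to describe data and to explain the relationship between one binary dependent variable and one or more nominal, ordinal, interval, or ratio-level independent variables. With 20 newsgroups, the binary model has to be extended to multiple classes, for example with a one-vs-rest or multinomial scheme.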
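As a minimal sketch of the binary case described above, the code below fits a one-feature logistic regression by gradient descent. The feature (how often a space-related word occurs in a post), the data, and the hyperparameters are all made up for illustration.

```python
import math


def sigmoid(z):
    """Map any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, epochs=1000, lr=0.1):
    """Fit weight and bias by batch gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y   # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Made-up feature: number of space-related words in a post.
xs = [0, 1, 1, 2, 4, 5, 6, 7]
ys = [0, 0, 0, 0, 1, 1, 1, 1]   # 1 = "sci.space", 0 = any other group
w, b = train_logistic(xs, ys)

for x in (1, 6):
    print(x, sigmoid(w * x + b) > 0.5)
```

The model outputs a probability, and the predicted class is obtained by thresholding it at 0.5.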

I fitted each model and calculated the classification report and confusion matrix for all the algorithms.
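To show what these two outputs contain, here is a from-scratch sketch on made-up labels. scikit-learn ships `classification_report` and `confusion_matrix` in `sklearn.metrics`, which is presumably what the notebook relied on. The helper `per_class_report` below is hypothetical and only covers precision and recall.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def per_class_report(matrix, labels):
    """Precision and recall for each class, read off the confusion matrix."""
    report = {}
    for i, label in enumerate(labels):
        true_pos = matrix[i][i]
        predicted_as = sum(row[i] for row in matrix)   # column total
        actually_is = sum(matrix[i])                   # row total
        report[label] = {
            "precision": true_pos / predicted_as if predicted_as else 0.0,
            "recall": true_pos / actually_is if actually_is else 0.0,
        }
    return report


labels = ["rec.autos", "sci.med", "sci.space"]
actual = ["rec.autos", "rec.autos", "sci.med", "sci.med", "sci.space", "sci.space"]
predicted = ["rec.autos", "sci.med", "sci.med", "sci.med", "sci.space", "rec.autos"]

matrix = confusion_matrix(actual, predicted, labels)
accuracy = sum(matrix[i][i] for i in range(len(labels))) / len(actual)
report = per_class_report(matrix, labels)

print(matrix)  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(report["sci.med"])
```

The diagonal of the matrix holds the correct predictions, so accuracy is the diagonal sum divided by the number of documents.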

Result:

As we see, logistic regression has the highest accuracy, i.e., it classifies the given text data better than the other three algorithms.

Contribution:

I initially started with the idea of performing classification using a single machine learning algorithm, but as I explored the data I wanted to see how it performs with different algorithms, and hence I implemented 4 different classification algorithms. I tried using functions like count vectorizer and tf-idf for pre-processing the data.

Challenges Faced and Experiments:

Understanding the data set and the initial steps were a bit difficult. I read a lot about text classification and which machine learning algorithms can be used for it. Once I had found the initial structure of the model, the next task was to implement it. With text, the main problem was tokenizing and building the vocabulary, but I later read about count vectorizer and implemented it in my model. For the implementation, the main problem was how to state my results clearly, so I used a classification report and confusion matrix. I also showed predicted results vs. actual results, which gives us an even better understanding of the model.
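The tokenizing and vocabulary-building step mentioned above can be sketched by hand as follows. This is not a reimplementation of scikit-learn's `CountVectorizer`, whose defaults differ. The tokenization rule and the function names are assumptions made for this illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep runs of letters or digits as tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(texts):
    """Map each distinct token to a column index, in alphabetical order."""
    tokens = sorted({tok for text in texts for tok in tokenize(text)})
    return {tok: i for i, tok in enumerate(tokens)}


def count_vector(text, vocab):
    """Count how often each vocabulary token occurs in `text`."""
    counts = Counter(tokenize(text))
    return [counts[tok] for tok in vocab]


texts = ["The car, the engine.", "Engine oil!"]
vocab = build_vocab(texts)
vectors = [count_vector(text, vocab) for text in texts]

print(vocab)    # {'car': 0, 'engine': 1, 'oil': 2, 'the': 3}
print(vectors)  # [[1, 1, 0, 2], [0, 1, 1, 0]]
```

Each text becomes a row of counts with one column per vocabulary word, which is the numerical form the classifiers need.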

GUI Sketch:

Initial page

Result:

Project Proposal: https://drive.google.com/file/d/1h6OJOT2lglHRpNxxqkQNuH-E6tP_aHNl/view?usp=sharing

GUI Sketch: https://drive.google.com/file/d/17UBGUto73jhQNuyvOvPkWatubMrth5fC/view?usp=sharing

Notebook: https://colab.research.google.com/drive/1ZMtVzIxe2ZpNgKWppNH8CLPHLQdPwj_R?usp=sharing

Youtube Video Link: https://youtu.be/cmgYYlZroGc

Data Set: https://www.kaggle.com/crawford/20-newsgroups

